Third Edition

Copyright © 2019 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Commissioning Editor:

Aaron Lazar

Acquisition Editor:

Denim Pinto

Content Development Editor:

Zeeyan Pinheiro

Technical Editor:

Romy Dias

Copy Editor:

Safis Editing

Project Coordinator:

Vaidehi Sawant

Proofreader:

Safis Editing

Indexer:

Mariammal Chettiyar

Graphics:

Alishon Mendonsa

Production Coordinator:

Deepika Naik

First published: June 2016

Second edition: December 2017

Third edition: February 2019

Production reference: 1220219

Published by Packt Publishing Ltd.

Livery Place

35 Livery Street

Birmingham

B3 2PB, UK.

ISBN 978-1-78953-072-8

Mapt is an online digital library that gives you full access to over 5,000 books and videos, as well as industry leading tools to help you plan your personal development and advance your career. For more information, please visit our website.

Spend less time learning and more time coding with practical eBooks and Videos from over 4,000 industry professionals

Improve your learning with Skill Plans built especially for you

Get a free eBook or video every month

Mapt is fully searchable

Copy and paste, print, and bookmark content

Did you know that Packt offers eBook versions of every book published, with PDF and ePub files available? You can upgrade to the eBook version at www.packt.com and as a print book customer, you are entitled to a discount on the eBook copy. Get in touch with us at customercare@packtpub.com for more details.

At www.packt.com , you can also read a collection of free technical articles, sign up for a range of free newsletters, and receive exclusive discounts and offers on Packt books and eBooks.

Sourabh Sharma has over 16 years of experience in product/application development. His expertise lies in designing, developing, deploying, and testing N-tier web applications and leading teams. He loves to troubleshoot complex problems and develop innovative ways to solve problems. Sourabh believes in continuous learning and sharing your knowledge.

Aristides Villarreal Bravo is a Java developer, member of the NetBeans Dream Team and the Java User Groups community, and a developer of the jmoordb framework. He is currently residing in Panama. He has organized and participated in various conferences and seminars related to Java, Java EE, NetBeans, the NetBeans Platform, open source software, and mobile devices, both nationally and internationally. He is a writer of tutorials and blogs for web developers about Java and NetBeans. He has participated in several interviews on sites including NetBeans, NetBeans Dzone, and JavaHispano. He is a developer of plugins for NetBeans.

If you're interested in becoming an author for Packt, please visit authors.packtpub.com and apply today. We have worked with thousands of developers and tech professionals, just like you, to help them share their insight with the global tech community. You can make a general application, apply for a specific hot topic that we are recruiting an author for, or submit your own idea.

Presently, microservices are the de-facto way to design scalable, easy-to-maintain applications. Microservice-based systems not only make application development easier, but also offer great flexibility in utilizing various resources optimally. If you want to build an enterprise-ready implementation of a microservice architecture, then this is the book for you!

Starting off by understanding the core concepts and framework, you will then focus on the high-level design of large software projects. You will gradually move on to setting up the development environment and configuring it, before implementing continuous integration to deploy your microservice architecture. Using Spring Security, you will secure microservices and integrate sample online table reservation system ( OTRS ) services with an Angular-based UI app. We'll show you the best patterns, practices, and common principles of microservice design, and you'll learn to troubleshoot and debug the issues faced during development. We'll show you how to design and implement event-based and gRPC microservices. You will learn various ways to handle distributed transactions and explore choreography and orchestration of business flows. Finally, we'll show you how to migrate a monolithic application to a microservice-based application.

By the end of the book, you will know how to build smaller, lighter, and faster services that can be implemented easily in a production environment.

This book is designed for Java developers who are familiar with microservice architecture and now want to effectively implement microservices at an enterprise level. A basic knowledge of Java and Spring Framework is necessary.

Chapter 1 , A Solution Approach , starts with basic questions about the existence of microservices and how they evolve. It highlights the problems that large-scale on-premises and cloud-based products face, and how microservices deal with them. It also explains the common problems encountered during the development of enterprise or large-scale applications, and the solutions to these problems. Many of you might have experienced the pain of delaying the rollout of a whole release due to the failure of one feature.

Microservices give the flexibility to roll back only those features that have failed. This is a very flexible and productive approach. For example, let's assume you are a member of an online shopping portal development team and want to develop an application based on microservices. You can divide your application based on different domains such as products, payments, cart, and so on, and package each of these components separately. Once you deploy these packages separately, each acts as a single component that can be developed, tested, and deployed independently; these components are called microservices.

Now let's see how this helps you. Let's say that after the release of new features, enhancements, and bug fixes, you find flaws in the payment service that need an immediate fix. Since the architecture is based on microservices, you can roll back just the payment service, instead of rolling back the whole release. You could also apply the fixes to the payment microservice without affecting the other services. This not only allows you to handle failure properly, but also helps to deliver features/fixes swiftly to the customer.

Chapter 2 , Environment Setup , teaches you how to set up the development environment from an integrated development environment ( IDE ), and looks at other development tools from different libraries. This chapter covers everything from creating a basic project, to setting up Spring Boot configuration, to building and developing our first microservice. Here, we'll use Java 11 as our language and Jetty as our web server.

Chapter 3 , Domain-Driven Design , sets the tone for the rest of the chapters by referring to one sample project designed using domain-driven design. This sample project is used to explain different microservice concepts from this chapter onward. This chapter uses this sample project to drive through different functional and domain-based combinations of services or apps to explain domain-driven design.

Chapter 4 , Implementing a Microservice , takes you from the design to the implementation of a sample project. Here, the design of our sample project explained in the last chapter is used to build the microservices. This chapter not only covers the coding, but also other different aspects of the microservices—build, unit testing, and packaging. At the end of this chapter, the sample microservice project will be ready for deployment and consumption.

Chapter 5 , Microservice Pattern – Part 1 , elaborates upon the different design patterns and why these are required. You'll learn about service discovery, registration, configuration, how these services can be implemented, and why these services are the backbone of microservice architecture. During the course of microservice implementation, you'll also explore Netflix OSS components, which have been used for reference implementation.

Chapter 6 , Microservice Pattern – Part 2 , continues from the first chapter on microservice patterns. You'll learn about the API Gateway pattern and its implementation. Failures are bound to happen, and a successful system design prevents the failure of the entire system due to one component failure. We'll learn about the circuit breaker, its implementation, and how it acts as a safeguard against service failure.

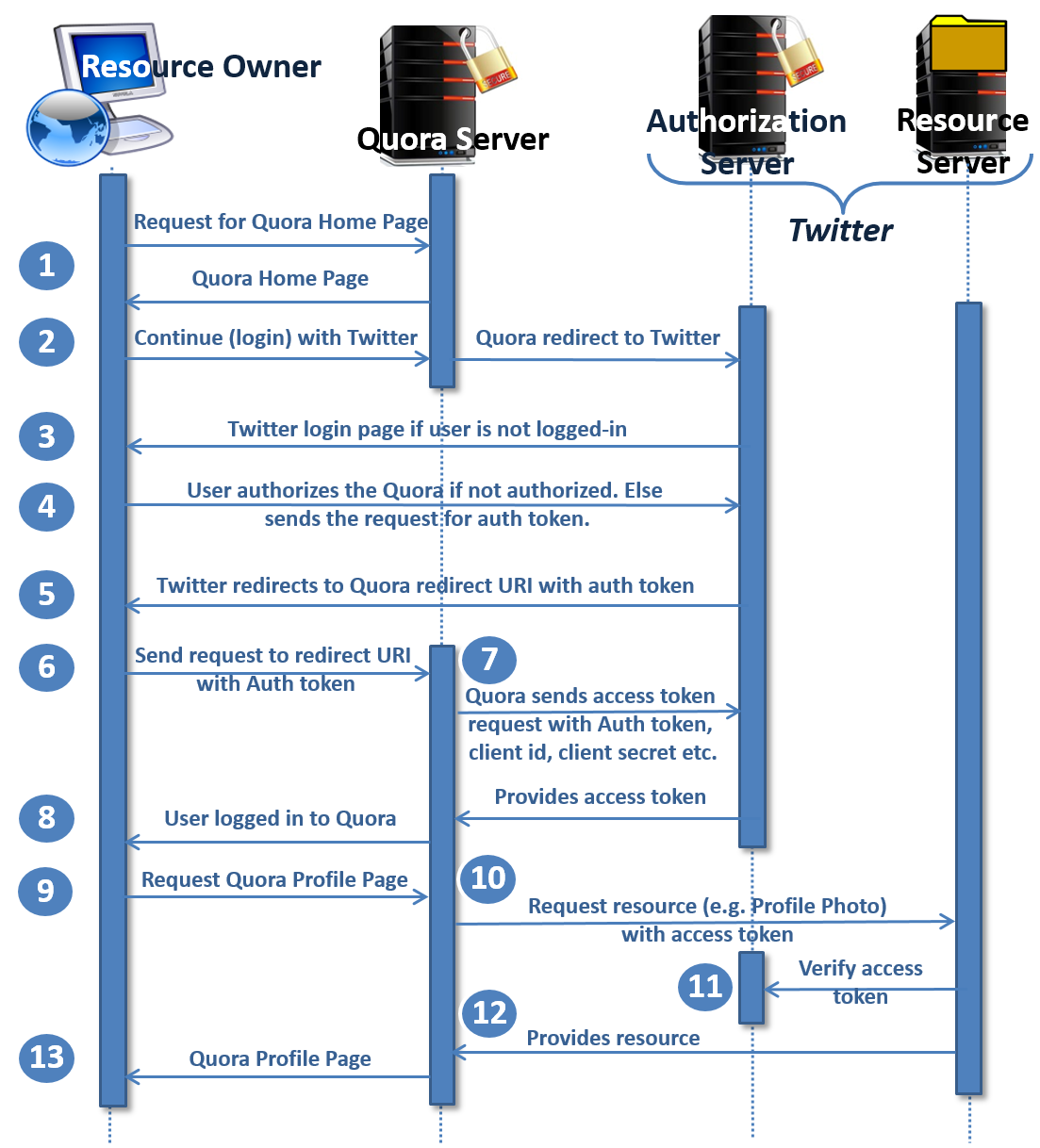

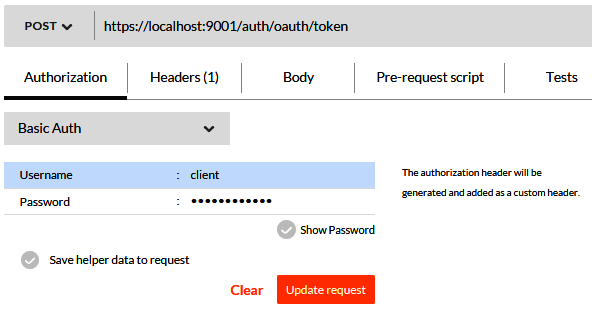

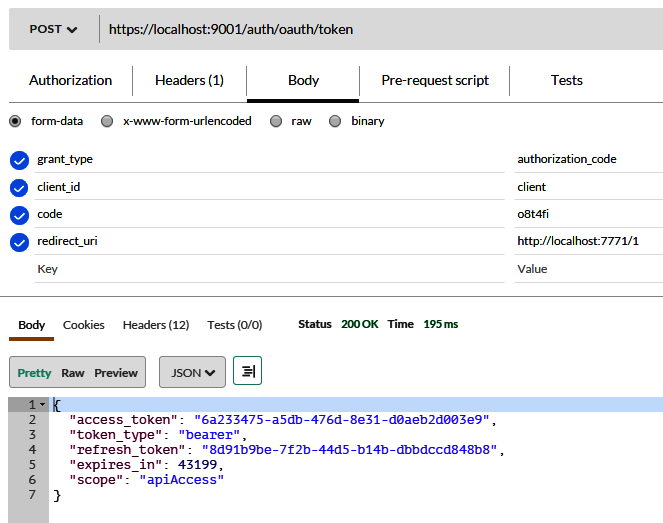

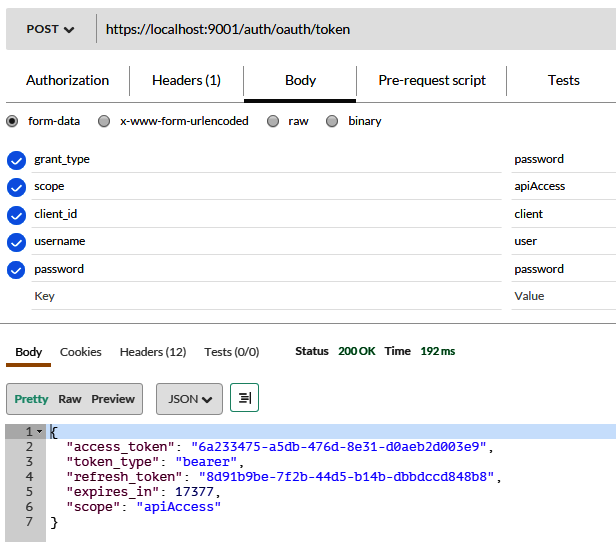

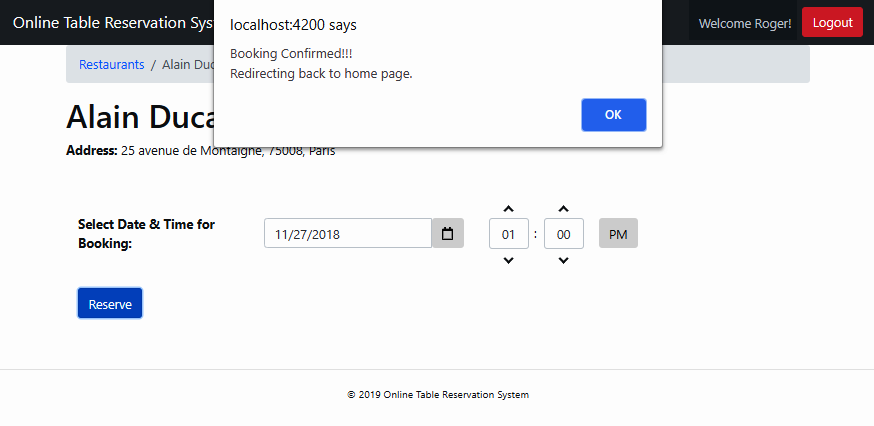

Chapter 7 , Securing Microservices , explains how to secure microservices with respect to authentication and authorization. Authentication is explained using basic authentication and authentication tokens. Similarly, authorization is examined using Spring Security 5.0. This chapter also explains common security problems and their solutions.

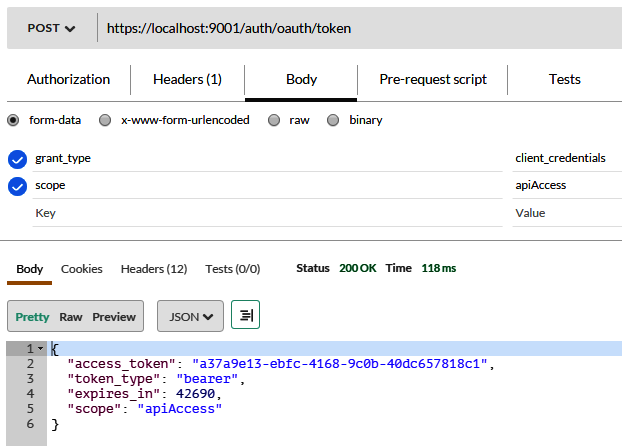

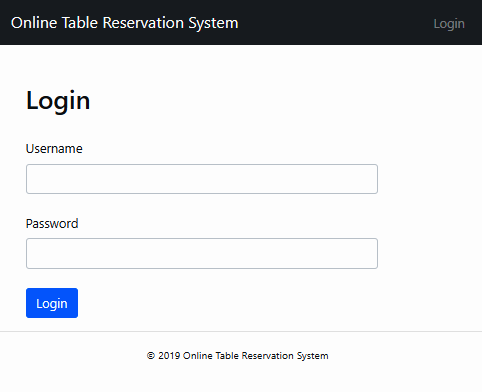

Chapter 8 , Consuming Microservices Using the Angular App , explains how to develop a prototype web application with Angular that consumes microservices to show the data and flow of a sample project – a small utility project.

Chapter 9 , Inter-Process Communication Using REST , explains how REST can be used for inter-process communication. The use of RestTemplate and the Feign client for implementing inter-process communication is also considered. Lastly, it examines the use of load balanced calls to services where more than one instance of a service is deployed in the environment.

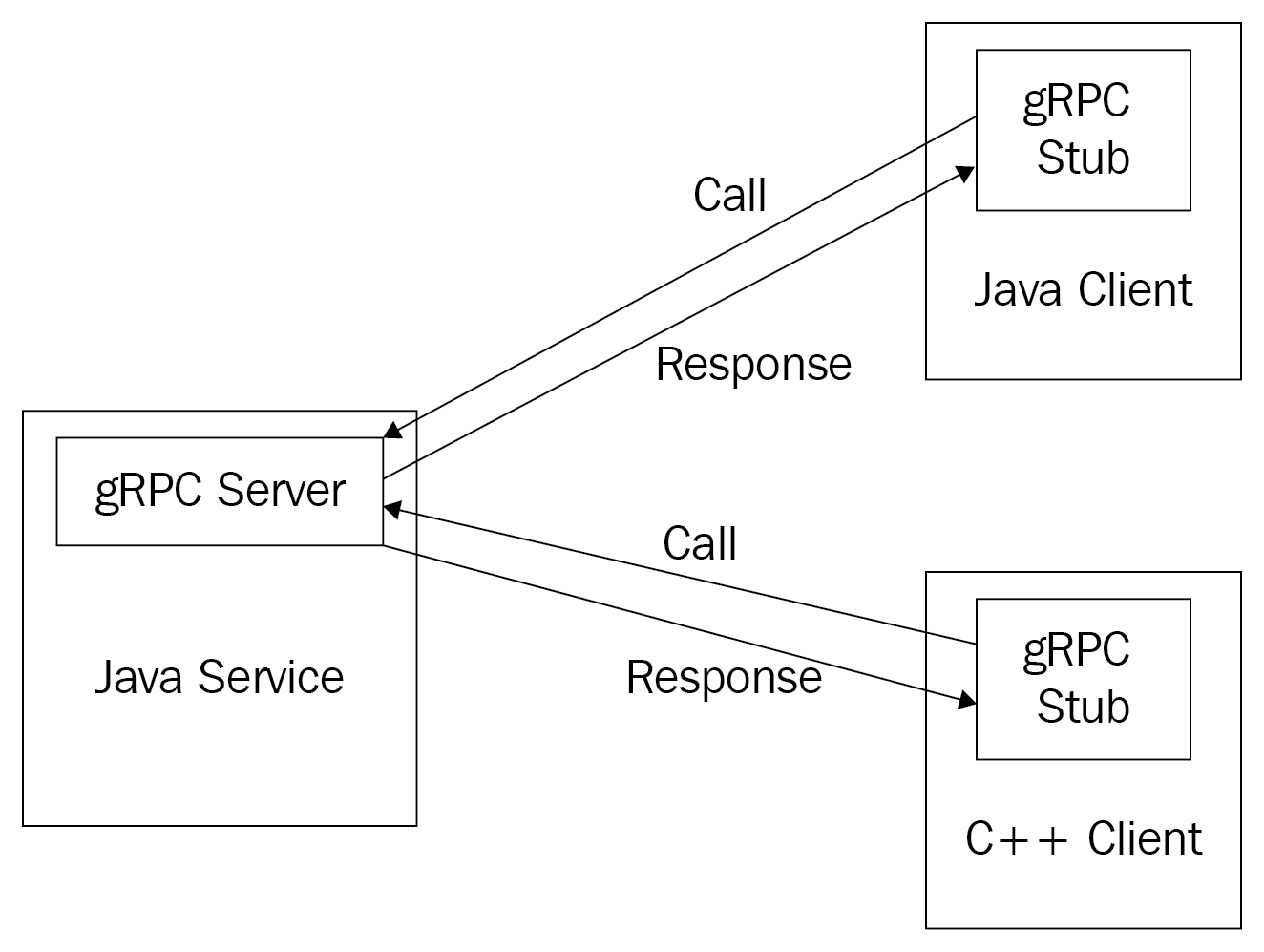

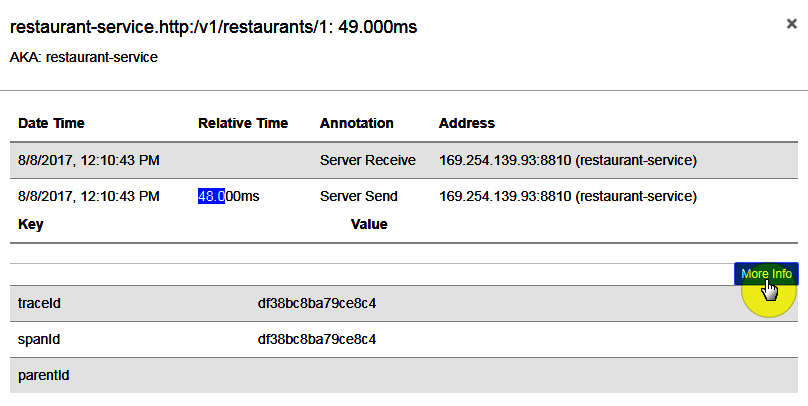

Chapter 10 , Inter-Process Communication Using gRPC , explains how to implement gRPC services and how these can be used for inter-process communication.

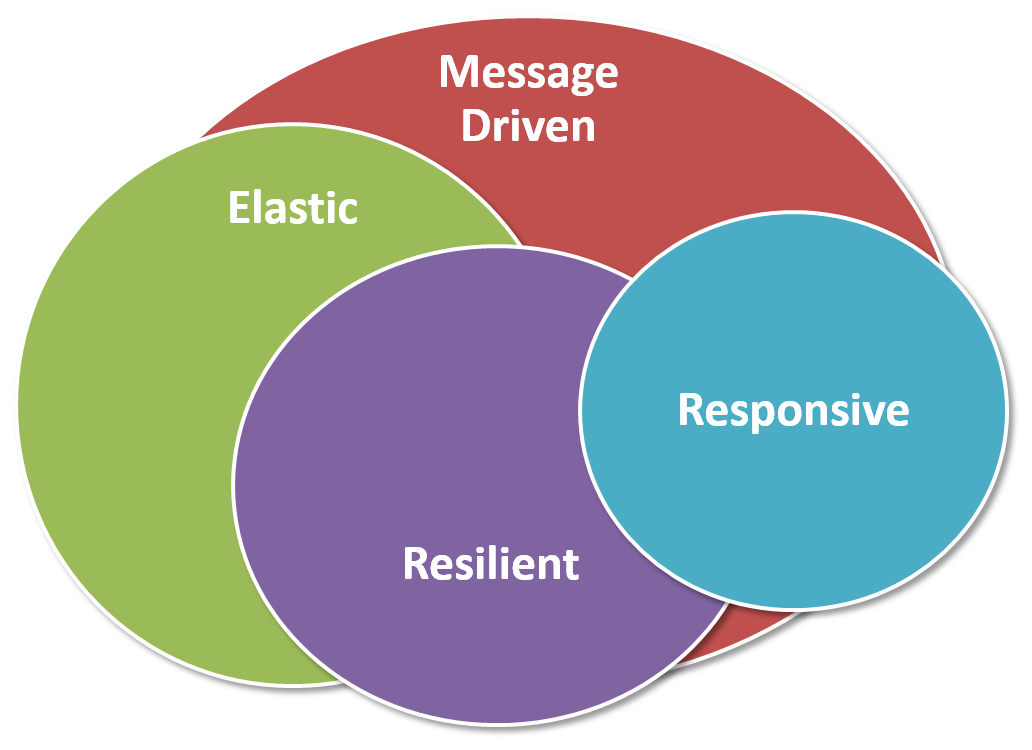

Chapter 11 , Inter-Process Communication Using Events , discusses reactive microservices and their fundamentals. It outlines the difference between plain microservices and reactive microservices. At the end, you'll learn how to design and implement a reactive microservice.

Chapter 12 , Transaction Management , teaches you about the problem of transaction management when a transaction involves multiple microservices and a call is routed through various services. We’ll discuss the two-phase commit and distributed saga patterns, and resolve the transaction management problem with a distributed saga implementation.

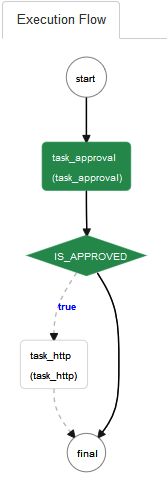

Chapter 13 , Service Orchestration , introduces you to different designs for establishing inter-process communication among services for specific flows or processes. You’ll learn about choreography and orchestration. You will also learn about using Netflix Conductor to implement the orchestration.

Chapter 14 , Troubleshooting Guide , talks about scenarios when you may encounter issues and get stuck. This chapter explains the most common problems encountered during the development of microservices, along with their solutions. This will help you to follow the book smoothly and will make learning swift.

Chapter 15 , Best Practices and Common Principles , teaches the best practices and common principles of microservice design. It provides details about microservices development using industry practices and examples. This chapter also contains a few examples where microservice implementation can go wrong, and how you can avoid such problems.

Chapter 16 , Converting a Monolithic App to a Microservices-Based App , shows you how to migrate a monolithic application to a microservice-based application.

You need to have a basic knowledge of Java and Spring Framework. You can explore the reference links given at the end of each chapter to get more out of this book.

For this book, you can use any operating system (out of Linux, Windows, or macOS) with a minimum of 4 GB RAM. You will also require NetBeans with Java, Maven, Spring Boot, Spring Cloud, Eureka Server, Docker, and a continuous integration/continuous deployment application. For Docker containers, you may need a separate virtual machine or cloud host, preferably with 16 GB or more of RAM.

You can download the example code files for this book from your account at www.packt.com . If you purchased this book elsewhere, you can visit www.packt.com/support and register to have the files emailed directly to you.

You can download the code files by following these steps:

Once the file is downloaded, please make sure that you unzip or extract the folder using the latest version of:

The code bundle for the book is also hosted on GitHub at https://github.com/PacktPublishing/Mastering-Microservices-with-Java-Third-Edition . In case there's an update to the code, it will be updated on the existing GitHub repository.

We also have other code bundles from our rich catalog of books and videos available at https://github.com/PacktPublishing/ . Check them out!

We also provide a PDF file that has color images of the screenshots/diagrams used in this book. You can download it here: https://www.packtpub.com/sites/default/files/downloads/9781789530728_ColorImages.pdf .

There are a number of text conventions used throughout this book.

CodeInText : Indicates code words in text, database table names, folder names, filenames, file extensions, pathnames, dummy URLs, user input, and Twitter handles. Here is an example: "First, we'll add Spring Cloud dependencies, as shown in pom.xml."

A block of code is set as follows:

    logging:
      level:
        ROOT: INFO
        org.springframework.web: INFO

When we wish to draw your attention to a particular part of a code block, the relevant lines or items are set in bold:

    endpoints:
      restart:
        enabled: true
      shutdown:
        enabled: true

Any command-line input or output is written as follows:

Chapter6> mvn clean package

Bold : Indicates a new term, an important word, or words that you see onscreen. For example, words in menus or dialog boxes appear in the text like this. Here is an example: "After the values are updated, click on the Save and Test button."

Feedback from our readers is always welcome.

General feedback : If you have questions about any aspect of this book, mention the book title in the subject of your message and email us at customercare@packtpub.com .

Errata : Although we have taken every care to ensure the accuracy of our content, mistakes do happen. If you have found a mistake in this book, we would be grateful if you would report this to us. Please visit www.packt.com/submit-errata , select your book, click on the Errata Submission Form link, and enter the details.

Piracy : If you come across any illegal copies of our works in any form on the Internet, we would be grateful if you would provide us with the location address or website name. Please contact us at copyright@packt.com with a link to the material.

If you are interested in becoming an author : If there is a topic that you have expertise in and you are interested in either writing or contributing to a book, please visit authors.packtpub.com .

Please leave a review. Once you have read and used this book, why not leave a review on the site that you purchased it from? Potential readers can then see and use your unbiased opinion to make purchase decisions, we at Packt can understand what you think about our products, and our authors can see your feedback on their book. Thank you!

For more information about Packt, please visit packt.com .

The following part of this book will teach you about the fundamentals of microservices and the basics that you need in order to implement microservice-based systems.

In this section, we will cover the following chapters:

As a prerequisite for proceeding with this book, you should have a basic understanding of microservices and different software architecture styles. Having a basic understanding of these will help you understand what we discuss in this book.

After reading this book, you will be able to implement microservices for on-premises or cloud production deployments and you will understand the complete life cycle, from design and development to testing and deployment, of continuous integration and deployment. This book is specifically written for practical use and to stimulate your mind as a solution architect. Your learning will help you to develop and ship products in any situation, including Software-as-a-Service ( SaaS ) and Platform-as-a-Service ( PaaS ) environments. We'll primarily use Java and Java-based framework tools, such as Spring Boot and Jetty, and we will use Docker for containerization.

In this chapter, you will learn about microservices and how they have evolved. This chapter highlights the problems that on-premises and cloud-based products face and how microservices architectures deal with them. It also explains the common problems encountered during the development of SaaS, enterprise, or large applications and their solutions.

In this chapter, we will explore the following topics:

Martin Fowler explains the following:

Let's get some background on the way microservices have evolved over the years. Enterprise architecture evolved from historic mainframe computing, through client-server architecture (two-tier to n -tier), to SOA.

The transformation from SOA to microservices is not a standard defined by an industry organization, but a practical approach practiced by many organizations. SOA eventually evolved to become microservices.

Adrian Cockcroft, a former Netflix architect, describes a microservice-based architecture as follows:

Similarly, the following quote from Mike Gancarz, a member of the team that designed the X Window System, which defines one of the paramount precepts of the Unix philosophy, describes the microservice paradigm as well:

Microservice architectures share many common characteristics with SOAs, such as the focus on services and how one service decouples from another. SOA evolved around monolithic application integration by exposing APIs that were mostly Simple Object Access Protocol (SOAP)-based. Therefore, having middleware such as an enterprise service bus (ESB) is very important for SOA. Microservices are less complex than SOAs, and, even though they may use a message bus, it is only used for message transport and it does not contain any logic. The design simply relies on smart endpoints.

Tony Pujals defined microservices beautifully:

Though Tony only talks about HTTP, event-driven microservices may use a different protocol for communication. For example, you can use Kafka to implement event-driven microservices; Kafka uses its own wire protocol, a binary protocol over TCP.
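To make the idea of a transport-only message bus with smart endpoints more concrete, here is a minimal in-memory sketch. It is not Kafka and does not use any Kafka API; the class names SimpleMessageBus and NotificationService and the topic name booking.created are hypothetical, chosen only for illustration. The bus does nothing but route messages by topic, while the business logic sits in the subscribing service.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-memory bus: it only transports messages by topic
// and contains no business logic of its own.
class SimpleMessageBus {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    void publish(String topic, String message) {
        // Deliver the message to every handler registered for this topic.
        for (Consumer<String> handler : subscribers.getOrDefault(topic, List.of())) {
            handler.accept(message);
        }
    }
}

// Hypothetical smart endpoint: the logic for reacting to an event lives here.
class NotificationService {
    final List<String> sent = new ArrayList<>();

    void onBookingCreated(String bookingId) {
        sent.add("Confirmation sent for booking " + bookingId);
    }
}

class MessageBusDemo {
    public static void main(String[] args) {
        SimpleMessageBus bus = new SimpleMessageBus();
        NotificationService notifications = new NotificationService();
        bus.subscribe("booking.created", notifications::onBookingCreated);
        bus.publish("booking.created", "B-100");
        System.out.println(notifications.sent);
    }
}
```

In a real event-driven system, a broker such as Kafka would take the place of SimpleMessageBus, and the publisher and subscriber would run in separate processes.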

Microservices are not new; they have been around for many years. For example, Stubby, a general-purpose infrastructure based on Remote Procedure Call (RPC), was used in Google data centers in the early 2000s to connect a number of services within and across data centers. Their recent rise is due to their popularity and visibility. Before microservices became popular, monolithic architectures were mainly being used for developing on-premises and cloud-based applications.

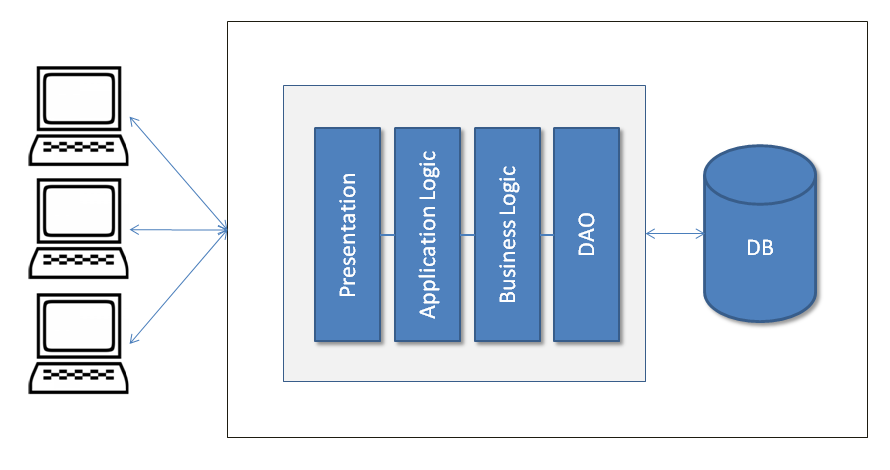

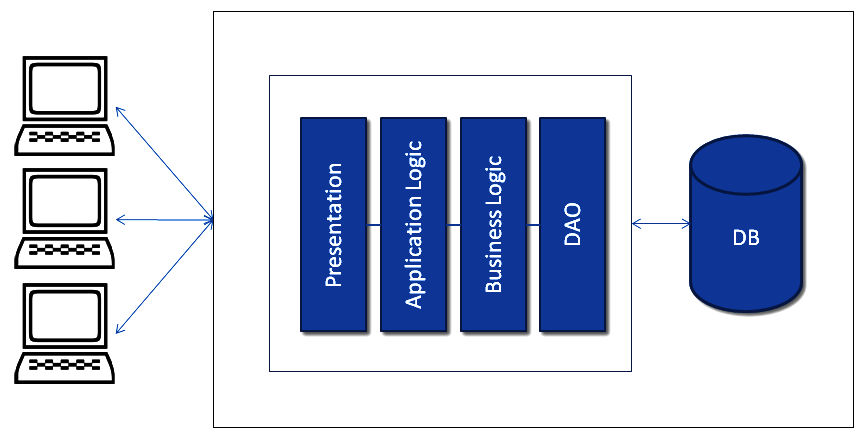

A monolithic architecture allows the development of different components such as presentation, application logic, business logic, and Data Access Objects ( DAOs ), and then you either bundle them together in an Enterprise Archive ( EAR ) or a Web Archive ( WAR ), or store them in a single directory hierarchy (such as Rails or Node.js).

Many famous applications, such as Netflix, have been developed using a microservices architecture. Moreover, eBay, Amazon, and Groupon have evolved from monolithic architectures to microservices architectures.

Now that you have had an insight into the background and history of microservices, let's discuss the limitations of the traditional approach (namely, monolithic application development) and see how microservices would address them.

As we know, change is eternal. Humans always look for better solutions. This is how microservices became what they are today, and they will evolve further in the future. Today, organizations are using agile methodologies to develop applications; it is a fast-paced development environment that has grown to a much larger scale after the invention of the cloud and distributed technologies. Many argue that monolithic architectures could also serve a similar purpose and be aligned with agile methodologies, but microservices still provide a better solution to many aspects of production-ready applications.

To understand the design differences between monolithic and microservices architectures, let's take an example of a restaurant table-booking application. This application may have many services to do with customers, bookings, analytics, and so on, as well as regular components, such as presentation and databases.

We'll explore three different designs here: the traditional monolithic design, the monolithic design with services, and the microservices design.

The following diagram explains the traditional monolithic application design. This design was widely used before SOA became popular:

In a traditional monolithic design, everything is bundled in the same archive (all the presentation code is bundled in with the Presentation archive, the application logic goes into the Application Logic archive, and so on), regardless of how it all interacts with the database files or other sources.

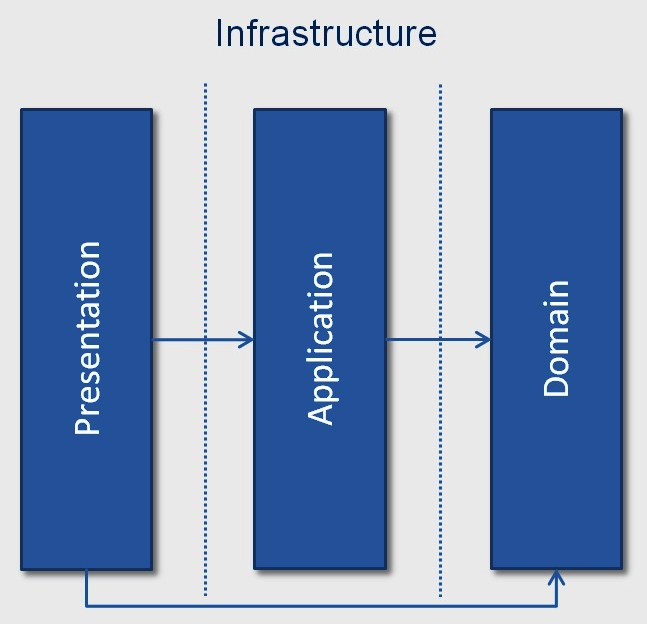

After SOA, applications started being developed based on services, where each component provides services to other components or external entities. The following diagram depicts a monolithic application with different services; here, services are being used with a Presentation component. All services, the Presentation component, or any other components are bundled together:

The following diagram depicts the microservices design. Here, each component is autonomous. Each component could be developed, built, tested, and deployed independently. Even the application User Interface (UI) component could be a client and consume the microservices. For the purpose of our example, the layered design is used within each µService.

The API Gateway provides an interface where different clients can access the individual services and solve various problems, such as what to do when you want to send different responses to different clients for the same service. For example, a booking service could send different responses to a mobile client (minimal information) and a desktop client (detailed information), providing different details to each, before providing something different again to a third-party client.
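The client-specific responses described above can be sketched as follows. This is only an illustrative model, not the book's API Gateway implementation: the Booking fields, the ClientType values, and the choice of which fields each client receives are all assumptions made for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical booking data, as a booking service might return it.
class Booking {
    final String id;
    final String restaurant;
    final String time;
    final int guests;
    final String specialRequests;

    Booking(String id, String restaurant, String time, int guests, String specialRequests) {
        this.id = id;
        this.restaurant = restaurant;
        this.time = time;
        this.guests = guests;
        this.specialRequests = specialRequests;
    }
}

// Hypothetical client categories that the gateway distinguishes.
enum ClientType { MOBILE, DESKTOP, THIRD_PARTY }

class BookingGateway {
    // Shapes one booking into a response suited to the calling client.
    Map<String, Object> respond(Booking booking, ClientType client) {
        Map<String, Object> response = new LinkedHashMap<>();
        response.put("id", booking.id);
        response.put("time", booking.time);
        switch (client) {
            case MOBILE:
                // Minimal information only.
                break;
            case DESKTOP:
                // Detailed information.
                response.put("restaurant", booking.restaurant);
                response.put("guests", booking.guests);
                response.put("specialRequests", booking.specialRequests);
                break;
            case THIRD_PARTY:
                // A different subset again; private notes are withheld.
                response.put("restaurant", booking.restaurant);
                break;
        }
        return response;
    }
}

class BookingGatewayDemo {
    public static void main(String[] args) {
        Booking booking = new Booking("B-1", "Le Meurice", "19:30", 4, "window seat");
        BookingGateway gateway = new BookingGateway();
        for (ClientType client : ClientType.values()) {
            System.out.println(client + " -> " + gateway.respond(booking, client));
        }
    }
}
```

Keeping this shaping logic in the gateway means the booking service itself can expose a single representation and stay unaware of who is calling it.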

A response may require the fetching of information from two or more services:

After observing all the sample design diagrams we've just gone through, which are very high-level designs, you might find that in a monolithic design, the components are bundled together and tightly coupled. All the services are part of the same bundle. Similarly, in the second design diagram, you can see a variant of the first diagram where all services could have their own layers and form different APIs, but, as shown in the diagram, these are also all bundled together.

Conversely, in the microservices design, the design components are not bundled together and are loosely coupled. Each service has its own layers and database, and is bundled in a separate archive from all the others. All these deployed services provide their specific APIs, such as Customers or Bookings. These APIs are ready to consume. Even the UI is deployed separately and designed using µServices. For this reason, the microservices design provides various advantages over its monolithic counterpart. I would, nevertheless, remind you that there are some exceptional cases where monolithic application development is highly successful, such as Etsy, and peer-to-peer e-commerce web applications.

Now let us discuss the limitations you'd face while working with monolithic applications.

Large monolithic applications, when scaled, scale everything, as all the components are bundled together. For example, in the case of a restaurant table reservation application, even if you would like to scale only the table-booking service, you would scale the whole application; you cannot scale the table-booking service separately. This design does not utilize resources optimally.

In addition, this scaling is one-dimensional. Running more copies of the application provides scale as transaction volume increases. An operations team could use a load balancer to adjust the number of application copies based on the load in a server farm or a cloud. Each of these copies would access the same data source, which increases memory consumption, and the resulting I/O operations make caching less effective.

Microservices architectures give the flexibility to scale only those services where scale is required and allow optimal utilization of resources. As mentioned previously, when needed, you can scale just the table-booking service without affecting any of the other components. It also allows two-dimensional scaling; here we can not only increase the transaction volume, but also the data volume using caching (platform scale). A development team can then focus on the delivery and shipping of new features, instead of worrying about the scaling issues (product scale).
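A rough back-of-the-envelope comparison can illustrate the resource argument. The sketch below uses entirely made-up figures (instance counts and memory per instance for hypothetical bookings, customers, and analytics components) and ignores per-service overhead, so treat it as an illustration of the reasoning rather than a sizing method.

```java
import java.util.Map;

class ScalingEstimate {
    // Monolith: every copy bundles all components, so the busiest
    // component dictates how many full copies must run.
    static int monolithMemoryMb(Map<String, Integer> instancesNeeded,
                                Map<String, Integer> memoryMbPerInstance) {
        int copies = 0;
        int memoryPerCopy = 0;
        for (Map.Entry<String, Integer> entry : instancesNeeded.entrySet()) {
            copies = Math.max(copies, entry.getValue());
            memoryPerCopy += memoryMbPerInstance.get(entry.getKey());
        }
        return copies * memoryPerCopy;
    }

    // Microservices: each service runs only as many instances as it needs.
    static int microservicesMemoryMb(Map<String, Integer> instancesNeeded,
                                     Map<String, Integer> memoryMbPerInstance) {
        int total = 0;
        for (Map.Entry<String, Integer> entry : instancesNeeded.entrySet()) {
            total += entry.getValue() * memoryMbPerInstance.get(entry.getKey());
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical load: only the table-booking component is under pressure.
        Map<String, Integer> instances = Map.of("bookings", 6, "customers", 2, "analytics", 1);
        Map<String, Integer> memoryMb = Map.of("bookings", 512, "customers", 256, "analytics", 1024);
        System.out.println("Monolith: " + monolithMemoryMb(instances, memoryMb) + " MB");
        System.out.println("Microservices: " + microservicesMemoryMb(instances, memoryMb) + " MB");
    }
}
```

With these assumed numbers, six full copies of the monolith need 6 × (512 + 256 + 1024) = 10,752 MB, whereas scaling each service independently needs 6 × 512 + 2 × 256 + 1 × 1024 = 4,608 MB.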

Microservices could help you scale along the platform, people, and product dimensions, as we have seen previously. People scaling here refers to an increase or decrease in team size depending on the microservices' specific development needs.

Developing microservices as RESTful web services provides scalability in the sense that the server end of REST is stateless; this means that there is not much communication between servers, which makes the design horizontally scalable.
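The link between statelessness and horizontal scaling can be shown with a small sketch. The names BookingInstance and RoundRobinBalancer are hypothetical, and the round-robin strategy is just one simple choice. Because each request carries everything the handler needs, any instance can serve it, and the instances never need to talk to each other.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stateless instance: the reply depends only on the request data,
// never on anything remembered from earlier requests.
class BookingInstance {
    final String name;

    BookingInstance(String name) {
        this.name = name;
    }

    String handle(String customerId, String tableId) {
        return "Booked table " + tableId + " for customer " + customerId;
    }
}

// Hypothetical balancer that hands requests to instances in turn.
class RoundRobinBalancer {
    private final List<BookingInstance> instances;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinBalancer(List<BookingInstance> instances) {
        this.instances = instances;
    }

    BookingInstance pick() {
        // floorMod keeps the index valid even if the counter overflows.
        int index = Math.floorMod(next.getAndIncrement(), instances.size());
        return instances.get(index);
    }
}

class StatelessScalingDemo {
    public static void main(String[] args) {
        RoundRobinBalancer balancer = new RoundRobinBalancer(
                List.of(new BookingInstance("booking-1"), new BookingInstance("booking-2")));
        for (int i = 0; i < 4; i++) {
            BookingInstance instance = balancer.pick();
            System.out.println(instance.name + ": " + instance.handle("C-42", "T-7"));
        }
    }
}
```

Adding capacity in this model is just a matter of adding another BookingInstance to the list; no session data has to be copied or synchronized.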

Since monolithic applications are either bundled in the same archive or contained in a single directory, they prevent modular deployment of code. For example, many of you may have experienced the pain of delaying rolling out the whole release due to the failure of one feature.

To resolve these situations, microservices give us the flexibility to roll back only those features that have failed. It's a very flexible and productive approach. For example, let's assume you are a member of an online shopping portal development team and want to develop an application based on microservices. You can divide your application based on different domains such as products, payments, cart, and so on, and package all these components as separate packages. Once you have deployed all these packages separately, each would act as a single component that can be developed, tested, and deployed independently, and is called a µService.

Now, let's see how that helps you. Let's say that after a production release launching new features, enhancements, and bug fixes, you find flaws in the payment service that need an immediate fix. Since the architecture you have used is based on microservices, you can roll back the payment service instead of rolling back the whole release, if your application architecture allows, or apply the fixes to the payment microservice without affecting the other services. This not only allows you to handle failure properly, but it also helps to deliver the features/fixes swiftly to a customer.
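The per-service rollback described above can be modeled with a small sketch. The DeploymentRegistry class and the service and version names are hypothetical; a real deployment pipeline would track far more than a version string. The point is only that each service keeps its own release history, so rolling one back leaves the others where they are.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical registry that keeps a separate version history per service.
class DeploymentRegistry {
    private final Map<String, Deque<String>> history = new HashMap<>();

    void deploy(String service, String version) {
        history.computeIfAbsent(service, s -> new ArrayDeque<>()).push(version);
    }

    String currentVersion(String service) {
        Deque<String> versions = history.get(service);
        return versions == null ? null : versions.peek();
    }

    // Rolls back only the named service and returns the version now live.
    String rollback(String service) {
        Deque<String> versions = history.get(service);
        if (versions == null || versions.size() < 2) {
            throw new IllegalStateException("No earlier version of " + service);
        }
        versions.pop();
        return versions.peek();
    }
}

class RollbackDemo {
    public static void main(String[] args) {
        DeploymentRegistry registry = new DeploymentRegistry();
        registry.deploy("payment", "1.0");
        registry.deploy("cart", "1.0");
        // A new release ships both services.
        registry.deploy("payment", "1.1");
        registry.deploy("cart", "1.1");
        // A flaw is found in payment only, so only payment is rolled back.
        System.out.println("payment -> " + registry.rollback("payment"));
        System.out.println("cart    -> " + registry.currentVersion("cart"));
    }
}
```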

Monolithic applications are mostly developed and enhanced based on the technologies primarily used during the initial development of a project or a product. This makes it very difficult to introduce new technology at a later stage of development or once the product is in a mature state (for example, after a few years). In addition, different modules in the same project that depend on different versions of the same library make this more challenging.

Technology is improving year on year. For example, your system might be designed in Java and then, a few years later, you may want to develop a new service in Ruby on Rails or Node.js because of a business need or to utilize the advantages of new technologies. It would be very difficult to utilize the new technology in an existing monolithic application.

It is not just about code-level integration, but also about testing and deployment. It is possible to adopt a new technology by rewriting the entire application, but it is a time-consuming and risky thing to do.

On the other hand, because of its component-based development and design, microservices architectures give us the flexibility to use any technology, new or old, for development. They do not restrict you to using specific technologies, and give you a new paradigm for your development and engineering activities. You can use Ruby on Rails, Node.js, or any other technology at any time.

So, how is this achieved? Well, it's very simple. Microservices-based application code does not bundle into a single archive and is not stored in a single directory. Each µService has its own archive and is deployed separately. A new service could be developed in an isolated environment and could be tested and deployed without any technical issues. As you know, microservices also run in their own separate processes, serving their purpose without conflicts over things such as tightly coupled shared resources, and the processes remain independent.

Monolithic systems do not provide the flexibility to introduce new technology. In a microservices-based system, however, introducing new technology is a low-risk change, because the components are small and self-contained by default.

You can also make your microservice available as open source software so it can be used by others, and, if required, it may interoperate with closed source, proprietary software, which is not possible with monolithic applications.

There is no question that monolithic applications can be developed using agile practices, and these are being developed all the time. Continuous integration (CI) and continuous deployment (CD) could be used, but the question is—do they use agile practices effectively? Let's examine the following points:

The preceding issues can be tackled very easily by microservices. For example, UI developers may have their own UI component that can be developed, built, tested, and deployed separately. Similarly, other microservices might also be deployable independently and, because of their autonomous characteristics, the risk of system failure is reduced. Another advantage for development purposes is that UI developers can make use of JSON objects and mock Ajax calls to develop the UI, which can be taken up in an isolated manner. After development is finished, developers can consume the actual APIs and test the functionality. To summarize, you could say that microservices development is swift and it aligns well with the incremental needs of businesses.
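The mock-first workflow mentioned above would normally be done in the UI's own language, but the same idea can be sketched in Java for consistency with the rest of the book. The BookingApi interface, MockBookingApi class, BookingWidget class, and the JSON shape are all hypothetical. The UI-side code depends only on the interface, so a canned JSON response can stand in until the real service is available.

```java
// Hypothetical contract between the UI and the booking service.
interface BookingApi {
    String getBookingJson(String bookingId);
}

// Mock used during isolated UI development: returns canned JSON.
class MockBookingApi implements BookingApi {
    @Override
    public String getBookingJson(String bookingId) {
        return "{\"id\":\"" + bookingId + "\",\"status\":\"CONFIRMED\"}";
    }
}

// UI-side component: it neither knows nor cares whether the API is a mock.
class BookingWidget {
    private final BookingApi api;

    BookingWidget(BookingApi api) {
        this.api = api;
    }

    String render(String bookingId) {
        return "<pre>" + api.getBookingJson(bookingId) + "</pre>";
    }
}

class MockApiDemo {
    public static void main(String[] args) {
        BookingWidget widget = new BookingWidget(new MockBookingApi());
        System.out.println(widget.render("B-7"));
    }
}
```

Once the real service exists, an implementation of BookingApi that makes the actual HTTP call can be passed to BookingWidget without changing the widget itself.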

Generally, large monolithic application code is the toughest to understand for developers, and it takes time before a new developer can become productive. Even loading the large monolithic application into an integrated development environment ( IDE ) is troublesome, as it makes the IDE slower and the developer less productive.

A change in a large monolithic application is difficult to implement and takes more time due to the large code base, and there can also be a high risk of bugs if impact analysis is not done properly and thoroughly. Therefore, it becomes a prerequisite for developers to do a thorough impact analysis before implementing any changes.

In monolithic applications, dependencies build up over time as all components are bundled together. Therefore, the risk associated with code changes rises exponentially as the number of modified lines of code grows.

When a code base is huge and more than 100 developers are working on it, it becomes very difficult to build products and implement new features because of the previously mentioned reason. You need to make sure that everything is in place, and that everything is coordinated. A well-designed and documented API helps a lot in such cases.

Netflix, the on-demand internet streaming provider, had problems getting their application developed, with around 100 people working on it. Then, they used a cloud service and broke up the application into separate pieces. These ended up being microservices. Microservices grew from the desire for speed and agility and to deploy teams independently.

Microcomponents are made loosely coupled thanks to their exposed APIs, which can be continuously integration tested. With the continuous release cycle of microservices, changes are small, and developers can rapidly exercise them with a regression test, then review the results and fix any defects found, reducing the risk of a flawed deployment. This results in higher velocity with a lower associated risk.

Owing to the separation of functionality and the single responsibility principle, microservices make teams very productive. You can find a number of examples online where large projects have been developed by very small teams, such as 8 to 10 developers.

Developers can have better focus with smaller code bases and better feature implementation, leading to a more empathetic relationship with the users of the product. This leads to better motivation and clarity in feature implementation. An empathetic relationship with users allows for a shorter feedback loop and better and speedier prioritization of the feature pipeline. A shorter feedback loop also makes defect detection faster.

Each microservices team works independently and new features or ideas can be implemented without being coordinated with larger audiences. The implementation of endpoint failure handling is also easily achieved in the microservices design.

At a recent conference, a team demonstrated how they had developed a microservices-based transport-tracking application for iOS and Android, within 10 weeks, with Uber-type tracking features. A big consulting firm gave a seven-month estimation for this application to its client. This shows how the microservices design is aligned with agile methodologies and CI/CD.

So far, we have discussed only the microservices design—there are also nanoservices, teraservices, and serverless designs to explore.

Microservices that are especially small or fine-grained are called nanoservices. A nanoservices pattern is really an anti-pattern.

In the case of nanoservices, overheads such as communication and maintenance activities outweigh their utility. Nanoservices should be avoided. An example of a nanoservices (anti-)pattern would be creating a separate service for each database table and exposing its CRUD operations using events or a REST API.

Teraservices are the opposite of microservices. The teraservices design entails a sort of a monolithic service. Teraservices require two terabytes of memory, or more. These services could be used when services are required only to be in memory and have high usage.

These services are quite costly in cloud environments due to the memory needed, but the extra cost can be offset by changing from quad-core servers to dual-core servers.

Such a design is not popular.

Serverless is another popular cloud architecture offered by cloud platforms such as AWS. There are servers, but they are managed and controlled by cloud platforms.

This architecture enables developers to focus simply on code and on implementing functionality. Developers need not worry about scale or resources (for instance, OS distributions such as Linux, or message brokers such as RabbitMQ) as they would with coded services.

A serverless architecture offers development teams the following features: zero administration, auto-scaling, pay-per-use schemes, and increased velocity. Because of these features, development teams just need to care about implementing functionality rather than the server and infrastructure.

CI and CD are important parts of today's development process. Therefore, having a proper pipeline for building, and for containerized delivery, is discussed in the following sub-sections.

Microservices can be built and tested using popular CI/CD tools, such as Jenkins and TeamCity. This is done very similarly to how a build is done in a monolithic application. In a microservices architecture, each microservice is treated like a small application.

For example, once you commit the code in the repository (SCM), CI/CD tools trigger the build process:

Then, a release build is triggered, which changes the SNAPSHOT or RELEASE version in pom.xml (in the case of Maven), builds the artifacts as described for the normal build trigger, publishes the artifacts to the artifact repository, and tags the version in the repository. If you use container images, then build the container image as a part of the build.

Because of the design of microservices, you need to have an environment that provides flexibility, agility, and smoothness for CI and CD as well as for shipment. Microservice deployments need speed, isolation management, and an agile life cycle.

Products and software can also be shipped using an intermodal-container model. An intermodal container is a large standardized container, designed for intermodal freight transport. It allows cargo to use different modes of transport—truck, rail, or ship—without unloading and reloading. This is an efficient and secure way of storing and transporting goods. It resolves the problem of shipping, which previously had been a time-consuming, labor-intensive process, and repeated handling often broke fragile goods.

Shipping containers encapsulate their content. Similarly, software containers are starting to be used to encapsulate their content (such as products, applications, and dependencies).

Previously, virtual machines (VMs) were used to create software images that could be deployed where needed. Later, containerization engines such as Docker became more popular as they were compatible with both traditional virtualization systems and cloud environments. For example, it is not practical to deploy more than a couple of VMs on a developer's laptop. Building and booting a VM is usually I/O intensive and consequently slow.

A container provides a lightweight runtime environment consisting of the core features of VMs and the isolated services of OSes. This makes the packaging and execution of microservices easy and smooth.

As the following diagram shows, a container runs as an application (microservice) within the OS. The OS sits on top of the hardware and each OS could have multiple containers, with one container running the application.

A container makes use of an OS's kernel interfaces, such as cgroups and namespaces, which allow multiple containers to share the same kernel while running in complete isolation from one another. This gives the advantage of not having to complete an OS installation for each usage; the result is that the overhead is removed. This also makes optimal use of the hardware.

Container technology is one of the fastest growing technologies today, and Docker is leading it. Docker is an open source project that was launched in 2013. 10,000 developers tried it after its interactive tutorial launched in August 2013. It was downloaded 2.75 million times by the time of the launch of its 1.0 release in June 2014. Many large companies have signed a partnership agreement with Docker, such as Microsoft, Red Hat, HP, OpenStack, and service providers such as AWS, IBM, and Google.

As we mentioned earlier, Docker also makes use of Linux kernel features, such as cgroups and namespaces, to ensure resource isolation and the packaging of the application with its dependencies. This packaging of dependencies enables an application to run as expected across different Linux OSes/distributions, supporting a level of portability. Furthermore, this portability allows developers to develop an application in any language and then easily deploy it from a laptop to a test or production server.

A container comprises just the application and its dependencies, including the basic OS. This makes the application lightweight and efficient in terms of resource utilization. Developers and system administrators are interested in a container's portability and efficient resource utilization.

Everything in a Docker container executes natively on the host and uses the host kernel directly. Each container has its own user namespace.

As specified in the Docker documentation, Docker uses a client-server architecture. The Docker client is basically a user interface that is used by an end user; clients communicate back and forth with a Docker daemon. The Docker daemon does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and the daemon can run on the same system or on different machines.

The Docker client and daemon communicate via sockets or through a RESTful API. Docker registries are public or private Docker image repositories to which you upload, or from which you download, images; for example, Docker Hub (hub.docker.com) is a public Docker registry.

The primary components of Docker are the following:

For more information, you can take a look at the overview of Docker that is provided by Docker (https://docs.docker.com/engine/docker-overview/).

Microservices deployment with Docker involves three things:

The preceding information will help you to understand the basics of Docker. You will learn more about Docker and its practical usage in Chapter 4, Implementing a Microservice. For more information, refer to https://docs.docker.com.

In this chapter, you have learned about or recapped the high-level design of large software projects, from traditional monolithic applications to microservices-based applications. You were also introduced to a brief history of microservices, the limitations of monolithic applications, and the benefits and flexibility that microservices offer. I hope this chapter helped you to understand the common problems faced in a production environment by monolithic applications and how microservices can resolve such problems. You were also introduced to lightweight and efficient Docker containers and saw how containerization is an excellent way to simplify microservices deployment.

In the next chapter, you will learn about setting up a development environment, looking at everything from your IDE and other development tools, to different libraries. We will deal with creating basic projects and setting up a Spring Boot configuration to build and develop our first microservice. We will be using Java 11 as the language and Spring Boot for our project.

This chapter focuses on the development environment setup and configurations. If you are familiar with the tools and libraries, you could skip this chapter and continue with Chapter 3, Domain-Driven Design, where you can explore domain-driven design (DDD).

This chapter will cover the following topics:

This book will use only the open source tools and frameworks for examples and code. This book will also use Java 11 as its programming language, while the application framework will be based on the Spring Framework. It will also make use of Spring Boot for developing microservices.

The Eclipse, IntelliJ IDEA, and NetBeans integrated development environments (IDEs) provide state-of-the-art support for both Java and JavaScript, and are sufficient for our needs. They have evolved a lot over the years and have built-in support for most of the technologies used in this book, including Maven and Spring Boot. Therefore, I would recommend using any of these IDEs. You are, however, better off using a version that supports Java 11.

We will use Spring Boot to develop the REST services and microservices. Opting for the most popular offering of Spring Framework, Spring Boot, or its subset, Spring Cloud, in this book was a conscious decision. Because of this, we don't need to write applications from scratch and it provides the default configuration for most of the technologies used in cloud applications. A Spring Boot overview is provided in Spring Boot's configuration section. If you are new to Spring Boot, this would definitely help you.

We will use Maven as our build tool. As with the IDE, you can use whichever build tool you want; for example, Gradle, or Ant with Ivy. We will use an embedded Jetty server as our web server, but another alternative is to use an embedded Tomcat web server. We will also use the Postman extension of Chrome for testing our REST services.

We will start with Spring Boot configurations. You can either create fresh new projects or import the project (Maven) using source code hosted on GitHub.

Spring Boot is an obvious choice for developing state-of-the-art, production-ready applications specific to Spring. Its website (https://projects.spring.io/spring-boot/) also states its real advantages.

Spring Boot is an amazing Spring tool created by Pivotal that was released in April 2014 (GA). It was developed based on the request of SPR-9888 (https://jira.spring.io/browse/SPR-9888) with the title, Improved support for 'containerless' web application architectures.

You must be wondering: Why containerless? Because today's cloud environment, or PaaS, provides most of the features offered by container-based web architectures, such as reliability, management, or scaling. Therefore, Spring Boot focuses on making itself an ultralight container.

Spring Boot is preconfigured to make production-ready web applications very easily. Spring Initializr (http://start.spring.io) is a page where you can select build tools, such as Maven or Gradle, along with project metadata, such as group, artifact, and dependencies. Once you fill in the required fields, you can just click on the Generate Project button, which will give you the Spring Boot project that you can use for your production application.

On this page, the default Packaging option is Jar. We'll also use JAR packaging for our microservices development. The reason is very simple: it makes microservices development easier. Just think how difficult it would be to manage and create an infrastructure where each microservice runs on its own server instance.

Josh Long shared the following in his talk on one of the Spring IOs:

Later, we will use Spring Cloud, which is a wrapper on top of Spring Boot.

We will develop a sample REST application that will use the Java 9 module feature. We will create two modules: lib and rest. The lib module will provide the models or any supported classes to the rest module. The rest module will include all the classes that are required to develop the REST application and it will also consume the model classes defined in the lib module.

Both the lib and rest modules are Maven modules, and their parent module is our main project, 11537_chapter2.

The module-info.java file is the module descriptor that governs access to a module's classes. We'll make use of requires, opens, and exports to use the Spring modules and establish the provider-consumer relationship between the lib and rest modules of our REST application.

We will use Java 11 to develop microservices. Therefore, we'll use the latest Spring Framework and Spring Boot project. At the time of writing, the Spring Boot 2.1.0 M2 milestone release is available.

You can use the latest released version. The Spring Boot 2.1.0 M2 milestone uses Spring 5 (5.1.0 M2 release).

Now, let's take a look at the following steps and learn about adding Spring Boot to our main project:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0

http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.packtpub.mmj</groupId>

<artifactId>11537_chapter2</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>pom</packaging>

<modules>

<module>lib</module>

<module>rest</module>

</modules>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>11</maven.compiler.source>

<maven.compiler.target>11</maven.compiler.target>

<start-class>com.packtpub.mmj.rest.RestSampleApp</start-class>

</properties>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.0.M2</version>

</parent>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>com.packtpub.mmj</groupId>

<artifactId>rest</artifactId>

<version>${project.version}</version>

</dependency>

<dependency>

<groupId>com.packtpub.mmj</groupId>

<artifactId>lib</artifactId>

<version>${project.version}</version>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

<configuration>

<classifier>exec</classifier>

<mainClass>${start-class}</mainClass>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<release>11</release>

<source>11</source>

<target>11</target>

<executable>${JAVA_1_11_HOME}/bin/javac</executable>

<showDeprecation>true</showDeprecation>

<showWarnings>true</showWarnings>

</configuration>

<dependencies>

<dependency>

<groupId>org.ow2.asm</groupId>

<artifactId>asm</artifactId>

<version>6.2</version>

<!-- Use newer version of ASM -->

</dependency>

</dependencies>

</plugin>

</plugins>

</build>

<repositories>

<repository>

<id>spring-snapshots</id>

<name>Spring Snapshots</name>

<url>https://repo.spring.io/snapshot</url>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

<repository>

<id>spring-milestones</id>

<name>Spring Milestones</name>

<url>https://repo.spring.io/milestone</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>spring-snapshots</id>

<name>Spring Snapshots</name>

<url>https://repo.spring.io/snapshot</url>

<snapshots>

<enabled>true</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>spring-milestones</id>

<name>Spring Milestones</name>

<url>https://repo.spring.io/milestone</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</project>

You can observe that we have defined our two modules, lib and rest, in the pom.xml file of the parent project.

The preceding steps will download all the required dependencies from a remote Maven repository if the declared dependencies and transitive dependencies are not available in a local Maven repository. If you are downloading the dependencies for the first time, then it may take a bit of time, depending on your internet speed.

Spring Boot adopts the simplest approach to building a standalone application that runs on an embedded web server. It creates an executable archive (JAR) file that contains everything, including an entry point defined by a class that contains the main() method. For making it an executable JAR file, you use Spring's support for embedding the Jetty servlet container as the HTTP runtime, instead of deploying it to an external instance for execution.

Therefore, we will create an executable JAR file as part of the rest module, in place of a WAR that would need to be deployed on an external web server. We'll define the domain models in the lib module and the API-related classes in the rest module.

We need to create separate pom.xml files for the lib and rest modules, respectively.

The pom.xml file of the lib module is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>com.packtpub.mmj</groupId>

<artifactId>11537_chapter2</artifactId>

<version>1.0-SNAPSHOT</version>

</parent>

<artifactId>lib</artifactId>

</project>

The pom.xml file of the rest module is as follows:

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>com.packtpub.mmj</groupId>

<artifactId>11537_chapter2</artifactId>

<version>1.0-SNAPSHOT</version>

</parent>

<artifactId>rest</artifactId>

<dependencies>

<dependency>

<groupId>com.packtpub.mmj</groupId>

<artifactId>lib</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

...

...

Here, the spring-boot-starter-web dependency is used for developing the standalone executable REST service.

Now, we need to define the modules using a module-info.java file in the default package of the lib and rest modules, respectively.

The module-info.java file in the lib module is as follows:

module com.packtpub.mmj.lib {

exports com.packtpub.mmj.lib.model to com.packtpub.mmj.rest;

opens com.packtpub.mmj.lib.model;

}

Here, we are exporting the com.packtpub.mmj.lib.model package to com.packtpub.mmj.rest, which gives the rest module classes access to the lib model classes.

The module-info.java file in the rest module is as follows:

module com.packtpub.mmj.rest {

requires spring.core;

requires spring.beans;

requires spring.context;

requires spring.aop;

requires spring.web;

requires spring.expression;

requires spring.boot;

requires spring.boot.autoconfigure;

requires com.packtpub.mmj.lib;

exports com.packtpub.mmj.rest;

exports com.packtpub.mmj.rest.resources;

opens com.packtpub.mmj.rest;

opens com.packtpub.mmj.rest.resources;

}

Here, the module definition pulls in all the requisite Spring modules and our own com.packtpub.mmj.lib module by using the requires statement. This allows the rest module classes to use classes defined in the Spring modules and the newly created lib module. Also, we're exporting and opening the com.packtpub.mmj.rest and com.packtpub.mmj.rest.resources packages.

Now that the modules are defined, you can create a sample web service. You will create a math API that performs simple calculations and generates the response in JSON format.

Let's discuss how we can call and get responses from REST services.

The service will handle the GET requests for /calculation/sqrt or /calculation/power , and so on. The GET request should return a 200 OK response with JSON in the body that represents the square root of a given number. It should look something like this:

{

"function": "sqrt",

"input": [

"144"

],

"output": [

"12.0"

]

}

The input field is the input parameter for the square root function, and the output field is the textual representation of the result.

You could create a resource representation class to model the representation by using a Plain Old Java Object (POJO) with fields, constructors, setters, and getters for the input, output, and function data. Since it is a model, we'll create it in the lib module:

package com.packtpub.mmj.lib.model;

import java.util.List;

public class Calculation {

private String function;

private List<String> input;

private List<String> output;

public Calculation(List<String> input,

List<String> output, String function) {

this.function = function;

this.input = input;

this.output = output;

}

public List<String> getInput() {

return input;

}

public void setInput(List<String> input) {

this.input = input;

}

public List<String> getOutput() {

return output;

}

public void setOutput(List<String> output) {

this.output = output;

}

public String getFunction() {

return function;

}

public void setFunction(String function) {

this.function = function;

}

}

You could say that Roy Fielding is the father of Representational State Transfer (REST), given that he defined this term in his doctoral dissertation. REST is a style of software architecture that makes good use of the existing HTTP/S protocols. RESTful systems comply with REST architecture properties, principles, and constraints.

Now, you'll create a REST controller to handle the Calculation resource. The REST controller class handles the HTTP requests in the Spring RESTful web service implementation.

@RestController is a class-level annotation for resource classes that was introduced in Spring 4. It is a combination of @Controller and @ResponseBody, and because of it, a class returns a domain object instead of a view.

In the following code, you can see that the CalculationController class handles GET requests for /calculation by returning a new instance of the Calculation class.

We will implement two URIs for a Calculation resource: the square root (the Math.sqrt() function) as the /calculation/sqrt URI, and power (the Math.pow() function) as the /calculation/power URI.

The @RequestMapping annotation is used at class level to map the /calculation URI to the CalculationController class; that is, it ensures that HTTP requests to /calculation are mapped to the CalculationController class. Based on the path defined using the @RequestMapping annotation on each method (the part of the URI that follows /calculation, for example, /calculation/sqrt/144), the request is mapped to the respective method. Here, the request mapping /calculation/sqrt is mapped to the sqrt() method, and /calculation/power is mapped to the pow() method.

You might have also observed that we have not defined which request method (GET, POST, PUT, and so on) the pow() method would use. The @RequestMapping annotation maps all the HTTP request methods by default. You could restrict a mapping to specific methods by using the method property of @RequestMapping, as sqrt() does with GET. For example, you could write the @RequestMapping annotation in the following way to use the POST method:

@RequestMapping(value = "/power", method = POST)

For passing the parameters along the way, the sample demonstrates both request parameters and path parameters using the @RequestParam and @PathVariable annotations, respectively.

@RequestParam is responsible for binding a query parameter to a parameter of the controller's method. For example, the query parameters base and exponent are bound to parameters b and e of the pow() method of CalculationController, respectively. Both of the query parameters of the pow() method are required, since we are not using any default value for them. Default values for query parameters could be set using the defaultValue property of @RequestParam, for example, @RequestParam(value="base", defaultValue="2"). Here, if the user does not pass the query parameter base, then the default value 2 would be used for the base.

If no defaultValue is defined and the user doesn't provide the request parameter, then the REST controller returns the HTTP status code 400 with the message Required String parameter 'base' is not present. If more than one required request parameter is missing, the message refers to the first of them:
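As a rough, plain-Java analogy for the fallback behavior of defaultValue, consider the following sketch. It is not Spring's implementation; the DefaultValueSketch class and its orDefault() and power() helpers are hypothetical names introduced only for illustration, and the fallback of 2 mirrors the example above:

```java
final class DefaultValueSketch {

    // Hypothetical helper: use the fallback when the raw parameter is absent or empty.
    static String orDefault(String raw, String fallback) {
        return (raw == null || raw.isEmpty()) ? fallback : raw;
    }

    // Mirrors the pow() calculation, with an assumed default of "2" for the base.
    static String power(String base, String exponent) {
        String b = orDefault(base, "2");
        double result = Math.pow(Double.parseDouble(b), Double.parseDouble(exponent));
        return String.valueOf(result);
    }

    public static void main(String[] args) {
        System.out.println(power(null, "3")); // base falls back to 2, prints 8.0
        System.out.println(power("5", "2"));  // base supplied, prints 25.0
    }
}
```

In the real controller, Spring performs this substitution before the method is invoked, so the method body never sees a missing base.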

{

"timestamp": 1464678493402,

"status": 400,

"error": "Bad Request",

"exception": "org.springframework.web.bind.MissingServletRequestParameterException",

"message": "Required String parameter 'base' is not present",

"path": "/calculation/power/"

}

@PathVariable helps you to create dynamic URIs. The @PathVariable annotation allows you to map Java parameters to a path parameter. It works with @RequestMapping, where the placeholder is created in a URI, and then the same placeholder name is used either as the name of the @PathVariable or as the method parameter, as you can see in the sqrt() method of the CalculationController class. Here, the value placeholder is created inside the @RequestMapping annotation and the same name is assigned to the value of the @PathVariable.

The sqrt() method takes its parameter from the URI path in place of a request parameter, for example, http://localhost:8080/calculation/sqrt/144. Here, the 144 value is passed as the path parameter and this URL should return the square root of 144, which is 12.

As a basic check, we use the regular expression "^-?+\\d+\\.?+\\d*$" to allow only valid numbers in parameters.

If non-numeric values are passed, the respective method adds an error message to the output key of the JSON. For example, for a non-numeric path value, the sqrt() method sets the output to Input value is not set to numeric value.

The sqrt() mapping uses the {value:.+} pattern so that a decimal value in the path is not cut off at the dot. There are other alternatives, such as adding a slash at the end (/path/{variable}/), or overriding the configurePathMatch() method of WebMvcConfigurerAdapter by setting useRegisteredSuffixPatternMatch to true, using PathMatchConfigurer (available in Spring 4.0.1+).
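To see which inputs this expression accepts, the following standalone sketch applies the same pattern to a few sample strings. The NumericPatternSketch class and its isNumeric() helper are hypothetical names used only for this illustration; the expression itself is the one used by the controller:

```java
import java.util.regex.Pattern;

final class NumericPatternSketch {

    // Same expression as the PATTERN constant in CalculationController.
    static final Pattern NUMERIC = Pattern.compile("^-?+\\d+\\.?+\\d*$");

    // Returns true only when the whole string matches the numeric pattern.
    static boolean isNumeric(String value) {
        return value != null && NUMERIC.matcher(value).matches();
    }

    public static void main(String[] args) {
        String[] candidates = {"144", "-12.5", "12.", ".5", "1e3", "abc", ""};
        for (String candidate : candidates) {
            System.out.println("'" + candidate + "' -> " + isNumeric(candidate));
        }
    }
}
```

Note that the pattern requires at least one digit before an optional decimal point, so 12. is accepted, whereas .5 and scientific notation such as 1e3 are rejected even though Double.valueOf() could parse them.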

The following is the code of the CalculationController resource, where we have implemented two REST endpoints:

package com.packtpub.mmj.rest.resources;

import com.packtpub.mmj.lib.model.Calculation;

import java.util.ArrayList;

import java.util.List;

import org.springframework.web.bind.annotation.PathVariable;

import org.springframework.web.bind.annotation.RequestMapping;

import static org.springframework.web.bind.annotation.RequestMethod.GET;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

/**

*

* @author sousharm

*/

@RestController

@RequestMapping("calculation")

public class CalculationController {

private static final String PATTERN = "^-?+\\d+\\.?+\\d*$";

/**

*

* @param b

* @param e

* @return

*/

@RequestMapping("/power")

public Calculation pow(@RequestParam(value = "base") String b, @RequestParam(value = "exponent") String e) {

List<String> input = new ArrayList<>();

input.add(b);

input.add(e);

List<String> output = new ArrayList<>();

String powValue;

if (b != null && e != null && b.matches(PATTERN) && e.matches(PATTERN)) {

powValue = String.valueOf(Math.pow(Double.valueOf(b), Double.valueOf(e)));

} else {

powValue = "Base or/and Exponent is/are not set to numeric value.";

}

output.add(powValue);

return new Calculation(input, output, "power");

}

/**

*

* @param aValue

* @return

*/

@RequestMapping(value = "/sqrt/{value:.+}", method = GET)

public Calculation sqrt(@PathVariable(value = "value") String aValue) {

List<String> input = new ArrayList<>();

input.add(aValue);

List<String> output = new ArrayList<>();

String sqrtValue;

if (aValue != null && aValue.matches(PATTERN)) {

sqrtValue = String.valueOf(Math.sqrt(Double.valueOf(aValue)));

} else {

sqrtValue = "Input value is not set to numeric value.";

}

output.add(sqrtValue);

return new Calculation(input, output, "sqrt");

}

}

Here, we are exposing only the power and sqrt functions for the Calculation resource using the /calculation/power and /calculation/sqrt URIs.
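Once the application is running, these two URIs could be called from any HTTP client. The following is a minimal sketch using the java.net.http.HttpClient API that ships with Java 11. The CalculationClientSketch class, its helper methods, and the http://localhost:8080 base URL are assumptions made for illustration; adjust the host and port to wherever your instance listens:

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class CalculationClientSketch {

    // Assumed base URL of the locally running sample application.
    static final String BASE_URL = "http://localhost:8080";

    // Builds a GET request that passes the value as a path parameter.
    static HttpRequest sqrtRequest(String value) {
        URI uri = URI.create(BASE_URL + "/calculation/sqrt/" + value);
        return HttpRequest.newBuilder(uri).GET().build();
    }

    // Builds a GET request that passes base and exponent as query parameters.
    static HttpRequest powerRequest(String base, String exponent) {
        URI uri = URI.create(BASE_URL + "/calculation/power?base=" + base
                + "&exponent=" + exponent);
        return HttpRequest.newBuilder(uri).GET().build();
    }

    public static void main(String[] args) throws InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        try {
            HttpResponse<String> response =
                    client.send(sqrtRequest("144"), HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } catch (IOException e) {
            // Reached when the service is not running at BASE_URL.
            System.err.println("Could not reach the service: " + e.getMessage());
        }
    }
}
```

The sketch does not URL-encode its arguments, so it is only suitable for simple numeric values such as the ones used in this chapter.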

One interesting thing here is that due to Spring's HTTP message converter support, the Calculation object gets converted to JSON automatically. You don't need to do this conversion manually. If Jackson 2 is on the classpath, Spring's MappingJackson2HttpMessageConverter converts the Calculation object to JSON.
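To make the mapping between the Calculation fields and the JSON keys concrete, here is a dependency-free sketch that renders the same shape by hand. It is only an illustration of the resulting structure, not how Jackson works internally: the JsonShapeSketch class and its helpers are hypothetical, the sketch does no string escaping, and the key order produced by a real converter may differ:

```java
import java.util.List;
import java.util.stream.Collectors;

final class JsonShapeSketch {

    // Quotes each value and joins the list into a JSON array (no escaping).
    static String toJsonArray(List<String> values) {
        return values.stream()
                .map(v -> "\"" + v + "\"")
                .collect(Collectors.joining(",", "[", "]"));
    }

    // Renders the three Calculation properties as a compact JSON object.
    static String toJson(String function, List<String> input, List<String> output) {
        return "{\"function\":\"" + function + "\","
                + "\"input\":" + toJsonArray(input) + ","
                + "\"output\":" + toJsonArray(output) + "}";
    }

    public static void main(String[] args) {
        String sqrt = String.valueOf(Math.sqrt(Double.parseDouble("144")));
        // Prints {"function":"sqrt","input":["144"],"output":["12.0"]}
        System.out.println(toJson("sqrt", List.of("144"), List.of(sqrt)));
    }
}
```

In the application itself, none of this code is needed; returning the Calculation object from the controller method is enough.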

Create a RestSampleApp class using the @SpringBootApplication annotation. The main() method uses Spring Boot's SpringApplication.run() method to launch an application.

We will annotate the RestSampleApp class with the @SpringBootApplication annotation that adds all of the following tags implicitly:

package com.packtpub.mmj.rest;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class RestSampleApp {

public static void main(String[] args) {

SpringApplication.run(RestSampleApp.class, args);

}

}

This web application is 100 percent pure Java, and you don't have to configure any plumbing or infrastructure using XML; instead, it uses Java annotations, which Spring Boot makes even simpler. There isn't a single line of XML apart from Maven's pom.xml; there isn't even a web.xml file.

Spring Boot, by default, provides Apache Tomcat as the embedded application container. This book uses the embedded Jetty application container in place of Apache Tomcat. Therefore, we need to exclude the Tomcat starter and add the Jetty starter dependency.

Jetty also allows you to read key stores and trust stores from the classpath; that is, you don't need to keep these stores outside the JAR file. If you use Tomcat with SSL, the key store (and the trust store, if you're using one) must be directly accessible on the filesystem; it cannot be loaded from the classpath, so it cannot be read from within a JAR file. This situation may change after this book has been written.

This limitation doesn't apply to Jetty. The relevant section of the rest module's pom.xml file is as follows:

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-tomcat</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jetty</artifactId>

</dependency>

</dependencies>

Maven's pom.xml build file contains the configuration needed to compile, build, and execute the REST sample service code. It packages the executable code inside a JAR file. We can execute the packaged executable JAR file either from an IDE or from the command line using Maven.

The following sections cover both options in detail.

All popular IDEs, such as Eclipse, NetBeans, and IntelliJ IDEA, support Java 11 and Spring. You can use whichever IDE you prefer, provided it supports Java 11.

Here, we use the Maven executable to build and package the JAR file, and then run it from the command line. The steps for this are as follows:

Make sure that the java executable and JAVA_HOME both point to Java 11 before executing the following commands. The first command builds and packages the project; the second runs the packaged JAR file:

mvn clean package

java -jar rest\target\rest-1.0-SNAPSHOT-exec.jar

This book uses the Postman tool for REST service testing; the examples here use Postman version 6.2.5.

Once you have the Postman REST client installed, let's test our first REST resource. Start Postman from either the Start menu or a shortcut.

By default, the embedded web server starts on port 8080. Therefore, we need to use the http://localhost:8080/<resource> URL to access the sample REST application, for example, http://localhost:8080/calculation/sqrt/144.

Once Postman has started, type the Calculation REST URL for sqrt, with the value 144 as the path parameter, into the request URL input field (enter request URL here), as shown in the following screenshot. The request method defaults to GET. We keep this default because our RESTful service is written to serve GET requests.

Once your input data is ready, submit the request by clicking the Send button. As the following screenshot shows, the sample REST service returns the response code 200; the Status label displays 200 OK. A successful request also returns the JSON data of the Calculation resource, shown in the Pretty tab in the screenshot.

The returned JSON shows sqrt as the value of the function key. It also contains 144 and 12.0 in the input and output lists, respectively:

Similarly, we also test our sample REST service for calculating the power function. We input the following data in the Postman extension:

Here, we are passing the request parameters, base and exponent, with values of 2 and 4, respectively. This returns the following JSON:

{

"function": "power",

"input": [

"2",

"4"

],

"output": [

"16.0"

]

}

The response status is 200 OK, as shown in the following screenshot:

In the following table, all the URLs start with http://localhost:8080:

| URL | JSON output |
| --- | --- |
| /calculation/sqrt/12344.234 | {"function": "sqrt", "input": ["12344.234"], "output": ["111.1046083652699"]} |
| /calculation/sqrt/-9344.34 (a special case of the Math.sqrt function: if the argument is NaN or less than zero, then the result is NaN) | {"function": "sqrt", "input": ["-9344.34"], "output": ["NaN"]} |
| /calculation/power?base=2.09&exponent=4.5 | {"function": "power", "input": ["2.09", "4.5"], "output": ["27.58406626826615"]} |
| /calculation/power?base=-92.9&exponent=-4 | {"function": "power", "input": ["-92.9", "-4"], "output": ["1.3425706351762353E-8"]} |
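The outputs in the preceding table follow from how Math.sqrt and Math.pow treat their arguments, combined with String.valueOf. The following is a small standalone sketch that repeats the controller's two expressions outside Spring; the MathSpecialCasesDemo class and its method names are illustrative assumptions, not part of the sample project:

```java
class MathSpecialCasesDemo {

    // Same expression as in CalculationController.sqrt(), without the validation.
    static String sqrt(String value) {
        return String.valueOf(Math.sqrt(Double.valueOf(value)));
    }

    // Same expression as in CalculationController.pow(), without the validation.
    static String pow(String base, String exponent) {
        return String.valueOf(Math.pow(Double.valueOf(base), Double.valueOf(exponent)));
    }

    public static void main(String[] args) {
        System.out.println(sqrt("144"));        // prints 12.0
        System.out.println(sqrt("-9344.34"));   // prints NaN (negative argument)
        System.out.println(pow("2", "4"));      // prints 16.0
        System.out.println(pow("-8", "0.5"));   // prints NaN (negative base, non-integer exponent)
        System.out.println(pow("-92.9", "-4")); // a small positive number in scientific notation
    }
}
```

Because the service returns the result as a string, NaN arrives in the JSON as the text "NaN" with a 200 OK status, not as an error.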

Similarly, you could also try some negative scenarios, as shown in the following table. In this table, all the URLs start with http://localhost:8080:

| URL | JSON output |
| --- | --- |
| /calculation/power?base=2a&exponent=4 | {"function": "power", "input": ["2a", "4"], "output": ["Base or/and Exponent is/are not set to numeric value."]} |
| /calculation/power?base=2&exponent=4b | {"function": "power", "input": ["2", "4b"], "output": ["Base or/and Exponent is/are not set to numeric value."]} |
| /calculation/power?base=2.0a&exponent=a4 | {"function": "power", "input": ["2.0a", "a4"], "output": ["Base or/and Exponent is/are not set to numeric value."]} |
| /calculation/sqrt/144a | {"function": "sqrt", "input": ["144a"], "output": ["Input value is not set to numeric value."]} |
| /calculation/sqrt/144.33$ | {"function": "sqrt", "input": ["144.33$"], "output": ["Input value is not set to numeric value."]} |
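The requests in the preceding tables can also be sent from code rather than from Postman. The following is a minimal sketch using the java.net.http.HttpClient API available since Java 11. The CalculationClient class and its helper methods are hypothetical names introduced for illustration, and the sketch assumes the sample service is running locally on the default port 8080:

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.List;

class CalculationClient {

    // Assumption: the service runs locally on the default embedded-server port.
    private static final String BASE_URL = "http://localhost:8080";

    // Builds a GET request for /calculation/sqrt/{value}.
    static HttpRequest sqrtRequest(String value) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/calculation/sqrt/" + value))
                .GET()
                .build();
    }

    // Builds a GET request for /calculation/power?base=...&exponent=...
    static HttpRequest powerRequest(String base, String exponent) {
        String query = "base=" + URLEncoder.encode(base, StandardCharsets.UTF_8)
                + "&exponent=" + URLEncoder.encode(exponent, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/calculation/power?" + query))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        // One positive sqrt call, one positive power call, and one negative scenario.
        List<HttpRequest> requests =
                List.of(sqrtRequest("144"), powerRequest("2", "4"), sqrtRequest("144a"));
        for (HttpRequest request : requests) {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(request.uri() + " -> " + response.statusCode());
            System.out.println(response.body());
        }
    }
}
```

Running main() requires the REST sample application to be started first; the request-building methods can be used on their own without a running server.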

In this chapter, you explored various aspects of setting up a development environment, including the Maven and Spring Boot configurations.

You also learned how to use Spring Boot to develop a sample REST service application. We saw how powerful Spring Boot is: it eases development so much that you only have to worry about the actual code, and not about boilerplate code or configuration. We also packaged our code into a JAR file with the embedded Jetty application container, which lets us run and access the web application without worrying about deployment.

In the next chapter, you will learn about domain-driven design (DDD) using a sample project that is used across the remainder of the chapters. We'll use the online table reservation system (OTRS) sample project to go through the various phases of microservices development and to understand DDD. After completing Chapter 3, Domain-Driven Design, you will know the fundamentals of DDD.

You will understand how to apply DDD in practical terms by designing sample services. You will also learn to design domain models and the REST services on top of them.

The following are a few links that you can take a look at in order to learn more about the tools we used here:

This chapter sets the tone for the rest of the chapters by introducing one sample project, which will be used to explain different microservice concepts from here onward. This chapter uses the sample project to walk through different combinations of functional and domain services or applications to explain domain-driven design (DDD). It will help you to learn the fundamentals of DDD and its practical usage. You will also learn about concepts related to the design of domain models using REST services.

This chapter covers the following topics:

Good software design is as important to the success of a product or service as the functionality it offers. For example, Amazon provides a shopping platform, but its architecture design sets it apart from other similar sites and contributes to its success. This shows how important a software or architecture design is to the success of a product or service. DDD is a software design practice, and we'll explore it with various theories and practical examples.

DDD is a key design practice that can be used to design the microservices of the product that you are developing. Therefore, we'll first explore DDD, before jumping into the development of microservices. After studying this chapter, you will understand the importance of DDD for microservices development.

An enterprise or cloud application solves business problems and other real-world problems. These problems cannot be resolved without knowledge of the particular domain. For example, you cannot provide a software solution for a financial system such as online stock trading if you don't understand stock exchanges and how they function. Domain knowledge is therefore a must, both for solving the problem and for designing the software or application that offers the solution. DDD is a software design methodology that combines domain knowledge with software design.

When we develop software to implement real-world scenarios offering the functionalities needed for a domain, we create a model of that domain. A model is an abstraction, or a blueprint, of the domain.

Designing this model is not rocket science, but it does take a lot of effort, refinement, and input from domain experts. This is the collective job of software designers, domain experts, and developers. They organize information, divide it into smaller parts, group them logically, and create modules. Each module can be taken up individually, and can be divided using a similar approach. This process can be followed until we reach the unit level, or until we cannot divide it any further. A complex project may have more such iterations; similarly, a simple project could have just a single iteration.

Once a model has been defined and well documented, it can move on to the next stage: code design. So, here, we have a software design (a domain model and a code design) and a code implementation of the domain model. The domain model provides a high-level view of the architecture of a solution (software/application), and the code implementation brings the domain model to life as a working model.

DDD makes design and development work together. It makes it possible to develop software continuously while keeping the design up to date based on feedback received from development. It addresses one of the limitations of the agile and waterfall methodologies by keeping the software, including its design and code, maintainable, and by keeping the application minimum viable (a minimum viable product, or MVP).

DDD involves developers right from the initial stage, and software designers discuss the domain with domain experts at all meetings during the modeling process. This gives developers the right platform to understand the domain, and provides the opportunity to share early feedback on the domain model implementation. It removes the bottleneck that appears in later stages when stakeholders wait for deliverables.

The fundamentals of DDD can broadly be categorized into two parts—building blocks, and strategic design and principles. These can be further categorized into different parts, shown as follows:

The following subsections explain the usage and importance of the building blocks of DDD.